Information architecture (IA) evaluation is critical to laying the groundwork for a successful IA design. The primary concern of an IA evaluation is the findability of information. Factors affecting findability include the organization or structure of the information, the means to navigate it, the language used to label its parts, and the alternative routes available to access the information.

The four most common and effective IA evaluation methodologies are expert evaluation, analytics review, card sorting, and findability studies.

Expert IA Evaluation

An expert IA evaluation is an assessment of an existing information space by two or more information architecture experts. The evaluation team looks for potential problems with information structure and labeling by conducting:

- A basic content analysis to enumerate and describe the content of a website or application, including evaluation of consistency and appropriateness of labeling and information grouping hierarchies.

- A findability analysis to identify and evaluate available paths to content, including evaluation of global navigation, cross linking, display of the user’s current position, and ability to return to a previous position.

- A search analysis to evaluate the performance of the search function, including how accurate and forgiving it is, readability of results, and ease of refining a search.

The results of an expert IA evaluation include findings about problems as well as successful features, recommendations for restructuring the site organization, and high-level conceptual diagrams of a suggested IA redesign. Also see TecEd’s heuristic evaluation methodology description.

Analytics Review

If your organization has analytics data for the site or application being redesigned, a review of analytics reports can reveal areas where site traffic does not follow desired patterns. Three signals are especially useful: how visitors move through your site or application space, where they abandon process paths, and whether they return repeatedly to the same page as if looking for something they cannot find. Together, these signals show where the current information architecture hinders visitors’ progress.
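As a rough illustration of this kind of review, the sketch below counts repeated returns to the same page and the pages where sessions end. The session data, the `min_visits` threshold, and the function names are all hypothetical; a real analytics export would need to be reshaped into this form first.

```python
from collections import Counter

# Hypothetical session data: each session is the ordered list of pages
# one visitor viewed. Real analytics exports will look different.
sessions = [
    ["/home", "/products", "/home", "/support", "/home"],
    ["/home", "/products", "/checkout"],
    ["/home", "/support", "/home", "/products"],
]

def repeated_returns(sessions, min_visits=3):
    """Count the sessions in which a page was viewed at least min_visits times."""
    counts = Counter()
    for pages in sessions:
        for page, visits in Counter(pages).items():
            if visits >= min_visits:
                counts[page] += 1
    return counts

def exit_pages(sessions):
    """Count how often each page was the last one viewed in a session."""
    return Counter(pages[-1] for pages in sessions if pages)

returns = repeated_returns(sessions)
exits = exit_pages(sessions)
print(returns.most_common())
print(exits.most_common())
```

A page that ranks high in `returns` may be one that visitors keep coming back to while searching. A page that ranks high in `exits` partway through a process path may mark a point of abandonment. Neither number explains why, so treat both as pointers for further evaluation.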

TecEd can also provide consulting to set up an analytics tracking and reporting process.

Card Sorting

Card sorting is an effective way to learn about how users group, label, and prioritize information. The term “card sorting” refers to the original method of using physical cards; today’s methods often rely on Web applications.

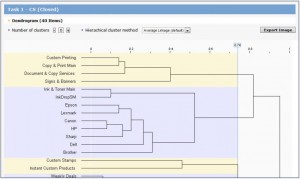

In a typical card-sorting exercise, participants sort terms or images representing content areas, tasks, or documents into groups that seem logical to them. They may sort these under provided names (closed card sort), or they may provide names for the groups they created (open card sort).

Card sorting can reveal users’ “mental models.” For example, you can learn how users visualize the structure of a website, what types of content they expect to find there, where they expect to find it, what they expect it to be named, and how they expect to navigate to it. The results can inform decisions about site organization, navigation, labeling and terminology, the site map, specialized site indexes, home page content, and more.

Card sorting provides both quantitative and qualitative data on which to base design decisions. For example, you can examine how many times any two given terms were grouped together (a high number suggests the grouped content should appear in the same content area), and then examine the names that users gave to the groups in which they placed the pair (common or repeated terms suggest a label for the content area).
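The pair-counting analysis described above can be sketched in a few lines. This is a minimal example under assumed inputs: the `sorts` data, the card names, and the `pair_counts` function are hypothetical, and the results of a card-sorting tool would need to be converted into this shape.

```python
from collections import Counter
from itertools import combinations

# Hypothetical open card sort results: one dict per participant, mapping
# the group name the participant chose to the cards placed in that group.
sorts = [
    {"Help": ["FAQ", "Contact us"], "Shop": ["Pricing", "Plans"]},
    {"Support": ["FAQ", "Contact us", "Plans"], "Buy": ["Pricing"]},
    {"Help": ["FAQ", "Contact us"], "Products": ["Pricing", "Plans"]},
]

def pair_counts(sorts):
    """Return (pairs, labels): how often each pair of cards shared a group,
    and which group names participants gave to the groups holding that pair."""
    pairs = Counter()
    labels = {}
    for participant in sorts:
        for label, cards in participant.items():
            # Sort the cards so each pair has one canonical ordering.
            for pair in combinations(sorted(set(cards)), 2):
                pairs[pair] += 1
                labels.setdefault(pair, Counter())[label] += 1
    return pairs, labels

pairs, labels = pair_counts(sorts)
for pair, count in pairs.most_common():
    print(pair, count, labels[pair].most_common(1))
```

With this sample data, "Contact us" and "FAQ" are grouped together by all three participants, most often under the name "Help", which suggests both a shared content area and a candidate label for it.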

As with other usability methods, it is important to focus on the most important or problematic areas. TecEd designs card-sorting studies to include representative terms that can stand for an entire class of similar cards. Conducting an expert IA evaluation can help focus a card sort on key areas in the information space, and can provide seed groupings in closed or semi-closed card sorts. Conducting user interviews to supplement card sorting can help “fill in the gaps” by eliciting what additional content areas are important to participants.

Findability Studies

Findability studies are used to validate how easily users can find specific items in the information space being designed. Findability studies can be conducted through moderated or unmoderated usability testing. In both cases, users see a representation of the current or proposed navigation structure, without other distracting elements. The tasks are information-finding scenarios.

Web applications for conducting unmoderated findability studies are becoming increasingly available. The reports from these studies clearly show the paths users followed and the backtracking they did. However, determining why users chose specific pathways, and what they expected to find there, requires follow-up contact or additional moderated testing.
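To show what path data from such a study can yield, here is a minimal sketch that summarizes success and backtracking for one task. The path format, the node names, and the metric definitions are assumptions made for illustration, not the output of any particular tool.

```python
# Hypothetical results for one information-finding task: the path each
# participant clicked through the navigation structure, and the node
# that holds the correct answer.
target = "Support > Returns"
paths = [
    ["Home", "Support", "Support > Returns"],
    ["Home", "Shop", "Home", "Support", "Support > Returns"],
    ["Home", "Shop", "Shop > Orders"],
]

def count_backtracks(path):
    """Count moves that return to a node already visited earlier in the path."""
    seen = set()
    backtracks = 0
    for node in path:
        if node in seen:
            backtracks += 1
        seen.add(node)
    return backtracks

def summarize(paths, target):
    """Summarize one task. Assumes at least one recorded path."""
    successes = [p for p in paths if p and p[-1] == target]
    direct = [p for p in successes if count_backtracks(p) == 0]
    return {
        "success_rate": len(successes) / len(paths),
        "direct_success_rate": len(direct) / len(paths),
        "total_backtracks": sum(count_backtracks(p) for p in paths),
    }

summary = summarize(paths, target)
print(summary)
```

A gap between `success_rate` and `direct_success_rate` indicates that users reached the item only after wandering. The reason for the detour still has to come from follow-up contact or moderated sessions.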